by Craig Klugman, Ph.D.

A new year is a time for thinking about the past and looking forward to the future. Over winter break, I read Yuval Noah Harari’s Homo Deus, a futurist work that examines what humanity’s future may look like given our current technological trajectories. The book was entertaining, and it also raised many questions about what ethics and bioethics might become if Harari’s predictions prove correct. To begin this new year, then, I want to spend some time thinking about the [lack of] future of bioethics.

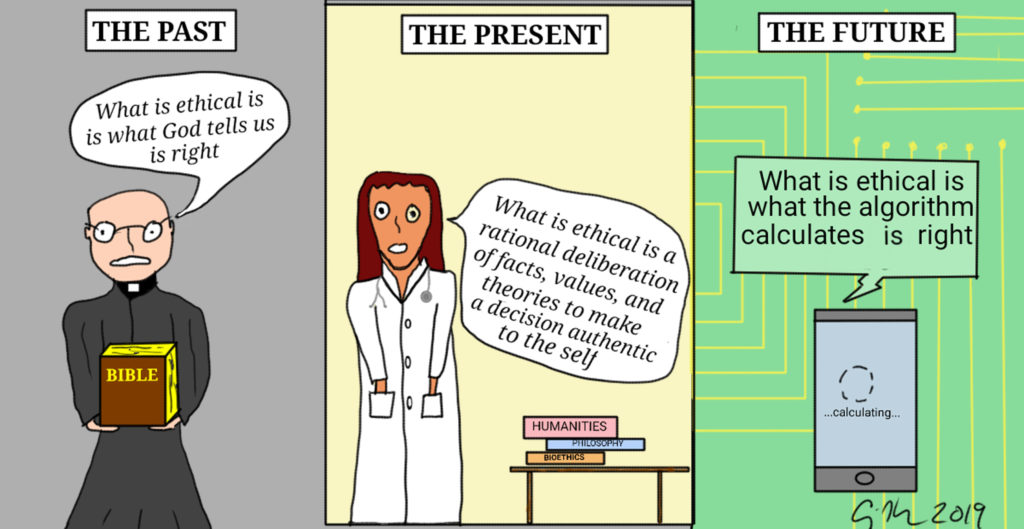

The starting point for this discussion is to consider the sources of our moral and ethical authority in the past, present, and future.

The Past

In the past, most sources of morality derived from religious authority, whether directly from a deity or via clergy or ecclesiastical bureaucracy. For the Abrahamic faiths, that meant the source of ethics was the Bible, Torah, Mishnah, or Koran, as interpreted by religious leaders. Right and wrong were presented to the people as predefined truths external to themselves. Lying is wrong because the priest said so at Mass. To violate this precept was to risk condemning one’s eternal soul to damnation.

The Present

The present has been defined by the Enlightenment, which placed priority on humanism and individualism. In this notion of the person, individuals determine right and wrong for themselves, either by relying on the older religious systems or by following their moral intuition. Harari says “A new formula for acquiring ethical knowledge appeared: Knowledge=Experiences x Sensitivity. If we wish to know the answer to any ethical question, we need to connect our inner experiences, and observe them with the utmost sensitivity.” Right and wrong come from within the individual, shaped by their learning, community, family, and [sometimes] faith. With no external punishment or reward, we make ethical choices so that we can be good people living in alignment with our values and ideals (think of the purpose of advance directives: to make sure that others make the choices we would have made, even if those choices are not in our best interest or in the best interest of others).

Bioethics is a modern movement that has attempted to find ways of thinking about right and wrong that often stand apart from religious systems and rely on more than personal opinion and feeling. We rely on moral philosophical theories and logic, bridging the gap between external and internal sources of morality. What is right is a narrative that provides meaning. For example, in clinical ethics, we work with people to help them make their own choices. Meaning, like choices, comes from our inner world, by accessing and understanding the authentic self.

The Future?

According to Harari, the latest thinking in biology and computer science holds that humans are no longer defined as individuals or as creations of deities, but rather as (1) organic algorithms that process information and (2) sources of data for faster, more efficient computer algorithms. Viewing biological organisms as algorithms means they lack souls and even free will; they simply process data on the basis of their genes and environment. For example, neuroscience has suggested that we have a moral decision-making center in the brain (the ventral posteromedial cortex, or vPMC). Our decisions may depend less on knowing our authentic self, studying theories, pleasing a deity, or making “rational” choices, and more on a brain reflex mediated by our genes and environment.

If our choices and actions are a result of our data-processing abilities, then artificial algorithms (i.e., powerful computers) are better at this work than we are. In the same way that I use my cell phone to keep track of my calendar and phone numbers, an external algorithm can learn to know me better than I know myself. The algorithm can also take into account the greater community, other people, and a larger perspective, something humans often find difficult. For example, in the Netflix show Travelers, teams of operatives are sent from the future to our time in order to nudge humanity down paths that lead to a more hopeful future (we never see their future, but we know that the Earth is devastated and humanity is on the brink of extinction). The whole project is run by an intelligent algorithm called “The Director,” which issues directives that nearly everyone follows without question, because the Director knows what is best for humanity, the planet, and even for you. The result, then, is a future in which right and wrong are not individual choices but are defined by an algorithm, and your duty is to follow that algorithm.

To translate this idea into its effects on bioethics, I will explore two futures: one near and one further away. In the immediate future, algorithms assist health care providers in diagnosing disease and recommending treatment. In fact, this is already happening: Intelligent programs efficiently schedule ORs, accurately read medical scans, and choose optimal cancer treatments for an individual. In the world of ethics, then, rather than calling an ethics consult and convening a meeting of the 40 or so people serving on the ethics committee, we will have an expert ethics system that simply knows the “ethical” choice to make. This would be the same decision that clinical ethicists would recommend after reading the chart, talking with the key stakeholders, holding a family meeting, researching the literature, analyzing the issues, and writing a chart note, but the intelligent system would make the recommendation in seconds.

For a real-life example of this algorithmic ethics, let’s consider the autonomous car. The car must make many ethical decisions, such as whether to give primary value to protecting its passengers or people outside the car. For example, if a pedestrian is crossing the street against the light and the car would have to stop short, skidding on ice and slamming into a concrete wall, should the car prioritize its passengers (i.e., run over the pedestrian) or value the pedestrian (risk injury to the passengers and to itself)? Currently, these ethical algorithms are constructed by committees (few of which include people trained in ethics), and the results of their scenario discussions are written into code by programmers. Thus, the job of the near-future ethicist is not to help people make their own decisions, but to help coders develop the algorithms that will make the choices for us. Again, since the programs know us better than we know ourselves, these would be the best possible choices, the ones we would make for ourselves.

In the further future, we will have algorithms created by machine learning rather than by human programmers. This technology exists today. The amount of data in the world is so massive and the processing demands so great that humans can’t even formulate the questions such data could answer. The machines are given data and then determine their own rules. If the database concerns cancer treatments, the machine may correlate DNA profiles with treatments and outcomes and quickly develop rules to maximize good outcomes, for instance, that certain treatments work best for certain genetic profiles or for people who have been exposed to certain toxins. The machines write their own algorithms (rules). In this vision, the “right” choice is the one that provides more data to the algorithms, and the “right” action is to follow the guidance of the algorithm, which will know us better than we know ourselves, will be more efficient, and will have a greater understanding of societies and science than any person ever could. We won’t just follow the ethics algorithms; we will want to follow them, because their decisions will align with our authentic self: These are the choices we likely would have made on our own anyway. Once the ethics algorithm develops, bioethicists as we know them are out of a job: One can pick up a mobile phone ethics app and the machine will give the best possible action. Morality becomes a computer problem rather than an internal deliberation.

Continuing the advance directive example, such documents would be of limited use because the algorithm would make the choice based on the medical facts, use of resources, knowledge of our values, and how each of us would make decisions. The algorithm will know us better than our family, friends, physicians, or health care surrogate.

An ethics based on analyzing how people behave and choose runs afoul of Hume’s is/ought problem: It derives what ought to be from what is. Bioethics has been struggling with this itself: What we ought to do is not necessarily reflected in what we actually do. Back to the autonomous car: One observation is that many cars speed up when they approach a yellow light, but the official rule is that you stop at the light if you can. A human-written algorithm would have an autonomous car stop at yellow lights when it is safe to do so. But a machine-written algorithm would see that many cars speed through yellow lights because that is more efficient (it uses less fuel than stopping and keeps traffic moving) and develop an ought statement based on that observation. Even in bioethics, much of the cutting-edge work is being done not with philosophy but with empirical methods, examining how people make choices in the real world. An advanced ethics algorithm would, ironically, develop its oughts from the data, a.k.a. what is.

In this vision of the future, then, autonomy is highly problematic, because self-governance that serves only a single individual (rather than a larger, worldwide perspective) would be unethical. In addition, autonomous decisions are affected by our feelings and internal dialogue, which are often inconsistent. An ethics algorithm removes the risk that one makes a choice just to “cut off your nose to spite your face.” Moral choices would be removed from emotion, but not from values.

One substantial change would be in the area of privacy. In the present, under humanism and individualism, privacy is a primary value. In a society run by algorithms that diagnose and cure disease, efficiently arrange commuting to and from work, and even help us choose what we really want for dinner, sharing information is key. We acquire an obligation to share our information with the algorithm so that it can improve. For example, I have written critically about projects that ignored privacy by using large amounts of patient data to learn more about diseases. Under our present view of humanity and ethics, these efforts violate my privacy and confidentiality. But in an algorithmic view of humanity, by insisting on privacy, I prevent access to my information that could be used to benefit others. Thus privacy becomes a “wrong,” and sharing, whether on social media, through wearable devices, via my medical records, or even through my internet-connected home, becomes “right.” Those who follow the idea of the quantified self have already bought into this vision of a human future. This trend suggests that the value of data for everyone outweighs my [outdated notion of an] individual right to privacy.

Many of you may be thinking that this is nonsense and that ethics is a system of rational thought based on knowledge, reflection, experience, and application. Making an ethical choice is an intellectual exercise of autonomy and free will, which are among the foundational premises of our practice. But much new empirical data suggests that our decisions are more influenced by our genes and environment than we realized. In fact, our sense of exercising free will might simply be a result of being attuned to our own algorithmic thinking. Still doubt? Consider this recent study: Your ability to make ethical decisions varies by time of day. If you are genetically programmed to be a morning person, you make better choices in the morning than later in the day, and vice versa for the night owl. Why would a truly free will vary with my genetic programming for alertness? This empirical finding severely challenges the humanist ideal.

A future bioethics shaped by new thinking about humans based in biology and computer science might find bioethicists superfluous. The intelligent algorithm will make choices that we follow. Ironically, this future looks a lot like the past, when an external deity determined right and wrong and we followed. In the past, we followed out of fear of estrangement from the community or of eternal punishment. In the future, we will follow because we know the system is much better at making choices than we are. I suggest that this does not diminish our idea of humanity and the self, but changes it, offering a future with less emphasis on the individual and more on the community (world?) as a whole. Thus, the golden age of bioethics in which we currently find ourselves may be but a blink in human history. Where I remain uncertain is whether we should remove the trappings of bioethics that have us question these scientific advancements, as many of us do in criticizing new technologies (think about the backlash against He Jiankui’s announcement that he had genetically engineered human babies), or whether we should continue to criticize and resist these changes to prevent a future in which a machine occupies the prime place in the universe.