by Craig Klugman, Ph.D.

“Mental illness and hatred pulls the trigger, not the gun” – President Trump, 4 August 2019

In 2019 (as of September 13), there have been 297 “mass shootings” (4 or more people killed or injured) in the United States, resulting in 326 deaths and 1,229 injuries. The reason, according to Trump’s administration, is untreated mental illness. According to many mental health specialists, most people with mental illness never injure another person, and many of the shooters have not been diagnosed with a mental illness. People have mental illnesses in other countries, and yet those places do not have a mass shooting problem. Alas, finding someone or something to blame for the epidemic of mass shootings in the U.S. is easier than facing the political reality that the problem is rooted in the easy availability of guns and structural poverty in the United States.

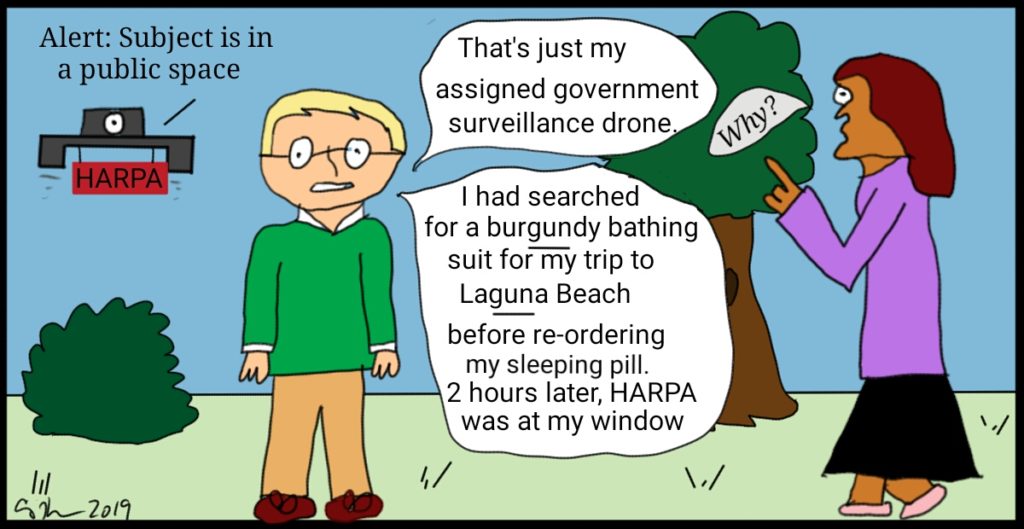

In response, the Trump administration has proposed a solution: figuring out who has mental illness and, by their logic, is at risk of shooting others. They plan to do this through large-scale surveillance of the general population. The idea is that by monitoring people’s behaviors through mobile phones, smartwatches, and social media postings, intelligent algorithms would flag people with mental illness who are about to violently harm others.

How would this be accomplished?

You may have heard of DARPA, the Defense Advanced Research Projects Agency, a center of technological innovation. When I was a college student in the late 1980s in California, being at a university where I could use a computer to instantly write to my friends who attended our state school back in New Jersey was amazing. This new technology was part of ARPANET, a DARPA-funded network that connected computers at many large research universities and became the foundation of the modern internet. The fact that I can write this article on a computer, save it on cloud storage, and post it on a website, all from the countertop in my kitchen, is thanks to DARPA.

A proposed counterpart for developing new medical technologies is HARPA, the Health Advanced Research Projects Agency, described as DARPA for health. The goal is to harness data (i.e., observations and measurements of people’s health status and behavior) through technology (think smartwatches, social media postings, fitness trackers) and use AI to sort through the data to find new cures for disease. Within that effort would be “Safe Home” (Stopping Aberrant Fatal Events by Helping Overcome Mental Extremes), which would monitor people with mental illness who are likely to become violent. The project would cost $40-$60 million and be based on technology the military already uses to identify behavior in soldiers that might make them a “possible threat” to their peers.

Filling the Swamp

What is unusual about HARPA is that the idea and the current proposal come not from the federal government, but from the private, non-profit Suzanne Wright Foundation. This organization was founded by Bob Wright in 2016 upon his wife’s death from pancreatic cancer. Bob Wright was a lawyer for GE, a federal judge, and later president of Cox Broadcasting and NBC Universal. Together, the Wrights founded Autism Speaks after one of their grandchildren was diagnosed with the condition.

Wright’s proposal for HARPA is that it become a federal program within the Department of Health and Human Services but that it work with other agencies and have “autonomy and flexibility”. Think of it as a private company inside the federal government. A HARPA website talks about collaborating with federal agencies, universities, private companies, and venture capitalists. It would not work on the grant system, but would instead award “contracts”, and its directors would have unchecked authority. This proposal raises several questions. What oversight would exist for this funneling of public dollars into private purses? Who would own the collected data (the patients, the federal government, the private interests)? And more.

Ethically Murky

Mental illness is not the cause of mass shootings. Thus, this solution would not solve the problem. What it would do is funnel federal dollars into private pockets. Bob Wright is a long-time supporter of Trump: he greenlighted Trump’s show The Apprentice on NBC and was an early cheerleader of Trump’s presidential campaign. Besides the murky political ethics issues of lack of transparency, nepotism, and financial conflicts of interest, the government taking people’s health-related information to surveil them raises a number of bioethics concerns. According to the HARPA website, participation in the program would be voluntary. But if the plan is voluntary, then how would it catch people who might go on violent shooting sprees? Do supporters think people considering mass shootings will sign up for a government surveillance plan? Or perhaps permission to collect data would be a condition of purchasing a gun? What is the scientific evidence that this approach would provide even a modicum of effectiveness? The solution really does not deal with the problem.

HARPA would be like a vacuum cleaner, sucking up your digital health data, which the federal government would then give away to private contractors. Such contractors could then do anything they want with your information, including making a healthy profit for themselves and putting your privacy at risk. After all, while a doctor has a fiduciary responsibility to act only in your best interest (which is why we share our private information with them), the federal government and certainly private contractors have no such obligations. They could sell your information to insurance companies (looks like Sally might be showing signs of heart trouble, better exclude that from her health insurance; Bob isn’t exercising, time to raise his life insurance premiums), your employer (fire them before they come down with that chronic disease), drug companies (sugar seems high, better send coupons for diabetes medications), and more.

Proponents of Safe Home say that they would not collect “sensitive health information” and would be cognizant of privacy issues. But the goal of the program is to identify individuals who might be violent—that can’t happen using anonymized or aggregate data.

Another issue is who would be responsible for following up with identified potential shooters. Will everyone with mental illness receive a visit from law enforcement? Be arrested? Or will they receive compassionate mental health care? Given our history, the latter seems unlikely. The system also holds a high likelihood of false positives. For example, I did a lot of online searching on mass shootings for this article; under Safe Home, I could expect a knock on my door. This proposal would increase stigma against mental illness and push people into hiding.

There is a fine line between surveilling people to prevent mass shootings, collecting data to cure disease, and keeping an eye on your political opponents. After all, who defines the potential risk factors for violence? Who defines violence? The needle could easily move toward tracking people who say things against the government.

There are easier solutions to the problem of mass shootings than setting up a high-tech, AI-guided surveillance state to find the very few who are potential shooters. The answer is to use science, epidemiology, and the experiences of other countries. When Australia had a mass shooting, it made the use, sale, and possession of some firearms illegal. Australia had one mass shooting in 2019. The Australian Medical Association is backing a real-time gun registry. When New Zealand had a mass shooting in 2019, within days it passed laws restricting ownership of firearms, including a buyback, and it is discussing a new proposal for a firearms registry. The Giffords Law Center recommends mandating trigger locks and storage requirements (unloaded and locked in a safe).

The answer is controlling firearms, not controlling people. The answer is valuing people over money. The answer is finally acting on guns instead of just offering hopes and prayers.