by Craig Klugman, Ph.D.

As you may have heard in the news, Cambridge Analytica is a big data company that “uses data to change audience behavior.” As they say on their website, “We find your voters and move them to action.” Allegedly, the company promised Republicans that they could influence the outcome of elections. The only problem is that they needed a large amount of personal data to do their work. The company that has one of the largest troves of data on the personal lives of over 2.2 billion people is Facebook. Getting access to the data was simple: A third-party company wrote and distributed a program that invited users to take a brief personality test. In the process of taking the quiz, the user gave the company permission to capture their personal information, as well as that of everyone on their friends list. Although only 270,000 people took the quiz, the program quickly grabbed the information of 50 million Facebook users.

We handle our phones 2,617 times per day, on average. We look at Facebook, on average, 14 times per day and spend 30 minutes of each day on that one site. All of that activity generates data. Consider that a few years ago, researchers posited that Google was more effective at predicting flu trends than the CDC. By tracking search terms related to the flu, Google could see where infections were occurring and who was being infected. This effort turned out not to work well, but others have been improving the concept to use such big data to better predict flu patterns.

Through the use of big data, it is possible to find trends, connections, and even ways to manipulate a populace to act in certain ways. This process is called digital phenotyping, which attempts to assess information about you based on your online behavior. The results can be used to build psychographic profiles of people for elections, or to learn about your health status. The same trend that has led to looking at big data to predict and manipulate voting is also being used in the world of health and medicine.

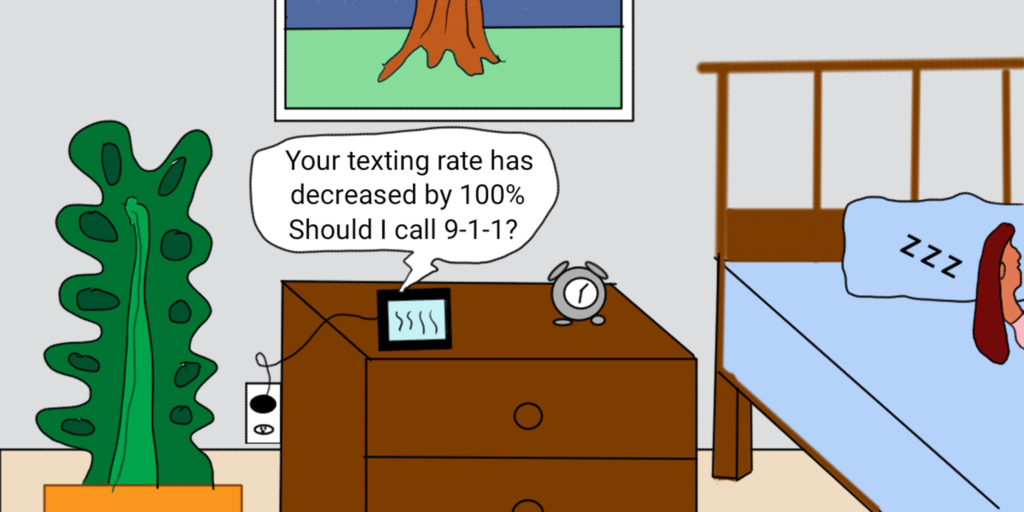

Facebook has an artificial intelligence algorithm that scans activities on its platform to detect signs of suicidal thinking. The AI examines feeds for signs of friends reaching out with offers of help or expressions of concern, such as posting “Are you okay?” When the AI detects content and patterns that may indicate suicidal thoughts, it notifies a team of mental illness specialists who can reach out to the user or let their friends know the person may be in trouble. Facebook can also contact local resources with the name and location of the flagged user: “The company said that, over a month, its response team had worked with emergency workers more than 100 times.”

Another startup is looking for changes in one’s smartphone use behavior to predict health status. If your normal typing speed on your phone is 41 words per minute, but over a few months that slips to 30, this change could be an indication that you are experiencing neurological changes. MindStrong Health is looking at how we use our phones to determine when our health may be changing. According to the company, cell phone use can indicate changes in sensory input, visual memory, verbal fluency, and processing speed, and analysis of your voice and speech can measure emotions and stress. An AI can examine your use patterns over time and flag any changes, which could be an indicator of a neuropsychiatric problem.
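To make the typing-speed example concrete, here is a minimal sketch of how a drop from a personal baseline might be flagged. It is not MindStrong Health's method, which the company has not described here. The function name `flag_typing_slowdown`, the four-week windows, and the 20% threshold are all hypothetical choices made for illustration, and nothing in it is clinically validated.

```python
from statistics import mean


def flag_typing_slowdown(wpm_history, baseline_n=4, recent_n=4, drop_threshold=0.20):
    """Return True if recent typing speed fell well below the early baseline.

    wpm_history: typing speeds in words per minute, oldest first
                 (for example, one averaged reading per week).
    baseline_n, recent_n, drop_threshold: hypothetical parameters,
                 chosen for illustration only.
    """
    # Without enough readings, the two windows would overlap, so do not flag.
    if len(wpm_history) < baseline_n + recent_n:
        return False

    baseline = mean(wpm_history[:baseline_n])  # the person's "normal" speed
    recent = mean(wpm_history[-recent_n:])     # their most recent speed

    if baseline <= 0:
        return False

    # Fraction by which recent speed has dropped relative to baseline.
    relative_drop = (baseline - recent) / baseline
    return relative_drop >= drop_threshold


# Illustrative (made-up) weekly readings that slip from about 41 to about 30 wpm.
declining = [41, 42, 40, 41, 38, 36, 33, 31, 30, 30, 29, 31]
stable = [41, 40, 42, 41, 40, 41, 42, 40]

print(flag_typing_slowdown(declining))
print(flag_typing_slowdown(stable))
```

Even a toy rule like this shows why the ethical questions arise: the only input is ordinary keyboard activity, yet the output is a guess about a person's health.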

The obvious ethical concerns with this use of big data are privacy and consent. We have stringent rules for how to handle private health information. But what happens when every motion is potential health information? If your typing speed can be an indication of a neurological disease, or the stress patterns in your voice indicate a health concern, is that information now protected? Most likely not under current statute. Our laws need to catch up with the technology. Although you can tweak your privacy settings on social media, the Cambridge Analytica case shows that privacy cannot be guaranteed online, no matter what efforts one may make. In medicine, the health care provider must preserve your confidence because the physician-patient relationship is a fiduciary one. There is no such relationship with your cell or social media provider. What you have with them is a terms of use agreement, i.e., a contract, which gives them more rights than it gives you.

When you signed up for your phone, you probably did not consent to having someone track every movement, every motion, and every touch on your device. In medical and research consent, you need to be told the risks, benefits, process, alternatives and how your information will be used. With these technologies, though, you are not told any of these things. Even if you agreed to some tracking of your online activity or mobile phone use, you were probably not informed that it could be used to determine if you have health problems and that such behaviors could be reported to authorities, friends, your insurance company, and even your local hospital system (once they purchase these services).

The unintended consequence of this constant surveillance and grading of our health may be increased stress over wondering how our data is used. Bentham suggested that a society of constant surveillance would lead to a more ethical culture: people would always do good because they never knew when they might be caught. In this case, however, the goal is to survey signs and symptoms over which we have no control. The situation is more akin to Hobbes’s state of nature, where we live in diffidence, a constant distrust and suspicion that everyone is always out to get us, use us, or gain an advantage over us.

Many may welcome these unobtrusive methods of tracking our health. Early detection of a neurological disorder could lead to early treatment or delaying the worsening of symptoms. What this technology notices are the small lapses that we can easily ignore or explain away to ourselves. If an adult is worried about their parent’s well-being, could they sign up for this service so they know when mom is “losing it”? For patients with a family history of a disease or a genetic marker for the condition, such a service could bring peace of mind: their phone tells them when they are having problems. Knowing someone is watching over you or your parent could provide peace of mind, sparing you from analyzing every instance of losing one’s keys or forgetting a phone number as a sign of decline.

If there are benefits, they will not be shared evenly. Smartphones are expensive, with the newest ones running $1,000. Smartphone ownership is directly correlated with socioeconomic status: The higher your income, the more likely you are to have a smartphone.

The question is whether the benefits of digital phenotyping (notification of an early-stage disease or being part of a social media community) are worth the price of losing privacy by living under constant surveillance. If these health-related services succeed, we may have to answer this question in the near future.